I use AI coding tools extensively in my daily development work, and they are absolutely powerful allies. This week I read a paper and watched a presentation that really made me think about how I use these tools. The first was a study by researchers at METR that should make every developer pause and think. In the study, they recruited 16 experienced developers from major open-source repositories (averaging 22k+ stars, 1M+ lines of code) and ran a controlled experiment.

Here’s the sneak preview that made me think:

Developers took 19% longer to complete tasks when using AI tools.

I read that and thought: wait, what? Even more revealing: these developers expected AI to make them 24% faster, and even after experiencing the slowdown, they still believed they had been 20% faster. The perception gap is staggering. We know developers are notoriously bad at time estimates, but this is something entirely different: a fundamental misperception of their own productivity while using tools they think are helping them.
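To make that gap concrete, here is a rough back-of-the-envelope sketch. The 60-minute baseline is a number I made up for illustration, and I am reading "N% faster" as "N% less time", which is my assumption rather than something the figures above spell out.

```python
# Illustrative only: BASELINE_MIN is a made-up task length, and
# "N% faster" is interpreted as "N% less time" (an assumption).
BASELINE_MIN = 60.0


def scaled(minutes: float, pct_change: float) -> float:
    """Return a duration adjusted by a signed percentage change in time."""
    return minutes * (1 + pct_change / 100)


expected = scaled(BASELINE_MIN, -24)   # forecast before starting: 24% faster
actual = scaled(BASELINE_MIN, +19)     # measured result: 19% longer
perceived = scaled(BASELINE_MIN, -20)  # belief afterwards: 20% faster

# Distance between what happened and what developers believed happened.
gap = actual - perceived

print(f"expected {expected:.1f} min, actual {actual:.1f} min, perceived {perceived:.1f} min")
print(f"perception gap: {gap:.1f} min on a {BASELINE_MIN:.0f}-minute task")
```

Under these assumptions, a task that would take an hour without AI takes about 71 minutes with it, while the developer walks away believing it took 48.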

The Volume Trap

AI tools excel at generating code fast. Really fast. Sometimes Claude becomes like a wild horse that I can’t control; within a minute, I’ll see multiple files like xxx_enhanced.py, xxy_v2_really_good.py, and explanatory notes in WHAT_I_DID.md files scattered across my project. I started wondering if this volume-over-quality problem was just me, so I looked into it. Turns out GitClear analyzed 211 million lines of code and found some concerning trends:

- 8x increase in duplicated code blocks (5+ lines) in 2024

- 46% of code changes are now new lines vs. thoughtful refactoring

- 17% decrease in code reuse patterns

So we’re definitely generating more code, but at what cost? The productivity metrics that VCs love (lines of code per hour, features shipped per sprint) miss the iceberg below the waterline. When I dug deeper into the METR study, I found exactly where all that “saved” time actually goes:

The Acceptance Reality:

- Developers accept only 44% of AI generations

- 75% of developers report reading every line of AI-generated code

- 56% of developers report needing to make “major changes to clean up AI code”

- 9% of total time spent just reviewing and cleaning AI outputs

This changes how developers actually spend their day. Instead of the seamless productivity boost we expect, the time breakdown looks like this:

- Less time actively coding and problem-solving

- More time reviewing AI outputs and fixing problems

- More time prompting AI systems and waiting for generations

- More time idle - actually sitting around doing nothing, which increases context switching

Now, this shift in how we work is somewhat expected. We’re in the early stages of integrating AI into development workflows, and we’ll definitely see improvements over time. The tools will get better at understanding context and generating more accurate code. There’s no going back to developing like we did 10 years ago. But right now, the math is still brutal: if developers accept only 44% of AI generations and spend 9% of their total time reviewing and cleaning AI outputs, where’s the productivity gain?

And those are just the immediate costs. The downstream effects are even more concerning:

- Technical debt accumulation at unprecedented rates

- 7% of AI-generated code gets reverted within two weeks (double the 2021 rate)

- Debugging time increases as developers spend more time fixing AI-generated code

- Code review overhead grows as teams struggle with unfamiliar patterns

The path forward isn’t using AI less; it’s using AI more thoughtfully, based on what the research actually tells us about where it succeeds and fails.

The Task Complexity Reality

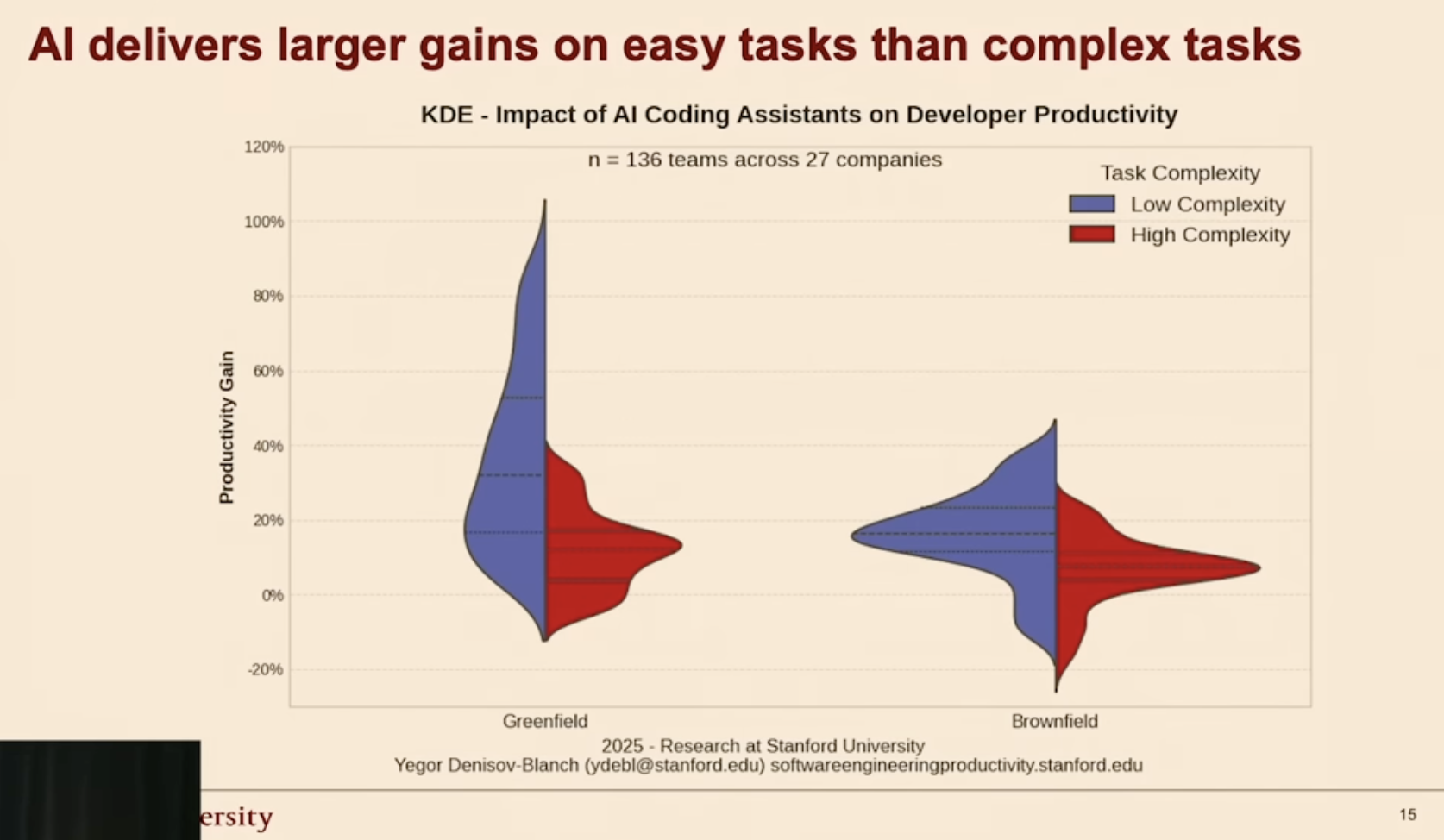

The second is a presentation from Stanford. It shows AI’s code generation limitations clearly: it delivers larger gains on easy tasks than on complex ones. The productivity distribution shows a stark divide between low- and high-complexity work. Two charts in particular are worth emphasizing:

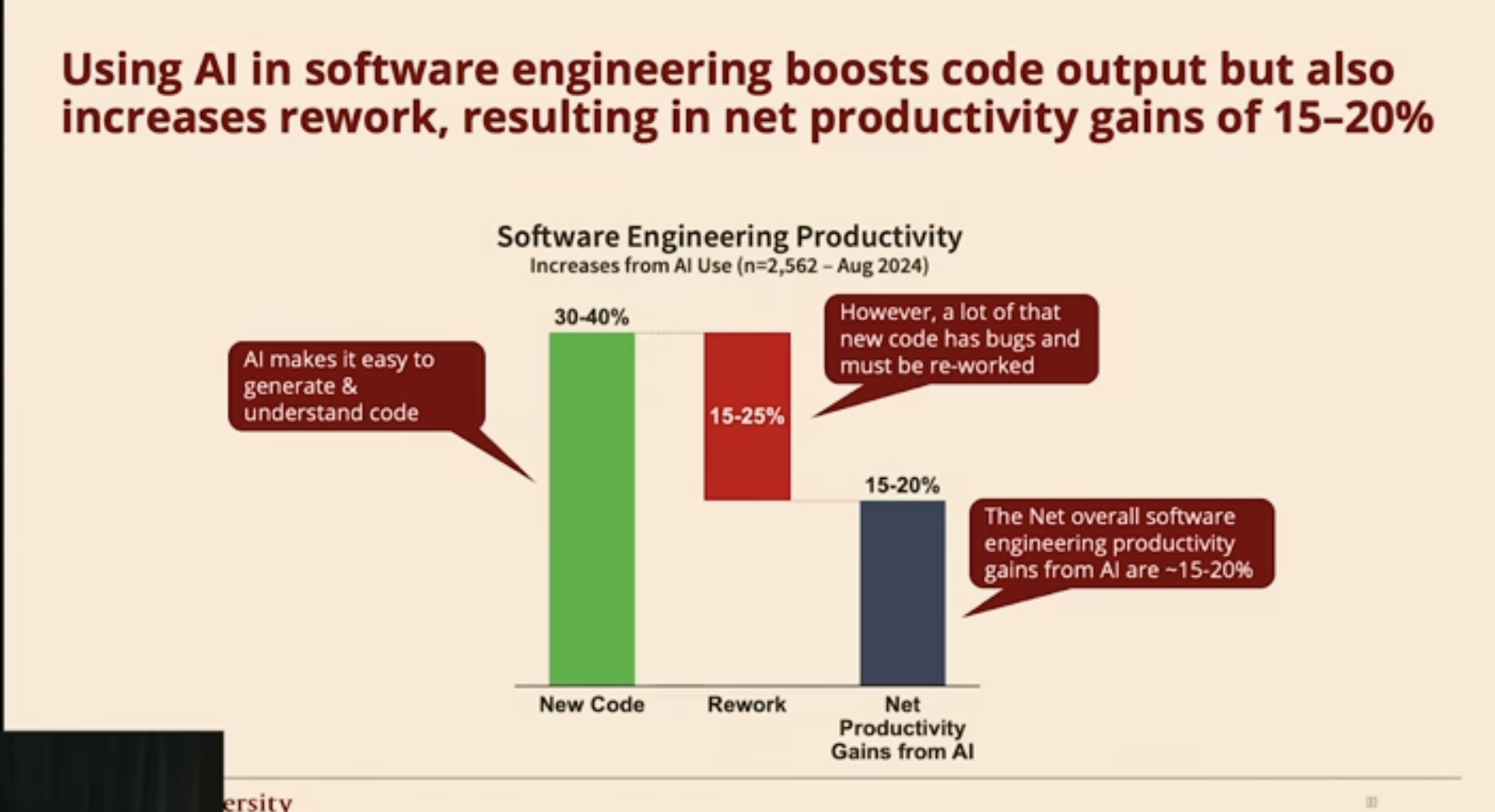

This chart perfectly illustrates the volume problem. It shows:

- 30-40% increase in new code generation - AI is indeed very good at pumping out code

- 15-25% increase in rework - but a significant portion of that code has bugs and needs to be fixed

- Net result: only 15-20% productivity gains, which is still good

This validates that raw code generation doesn’t equal productivity. The chart literally shows that while AI generates much more code, the rework overhead eats into those gains significantly.
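A quick sanity check on those ranges, as a sketch only: I am assuming the net gain is simply new-code gain minus rework, and I am using the midpoints of the quoted ranges. The presentation may compute its net figure differently.

```python
# Ranges quoted above, in percentage points.
new_code_gain = (30, 40)  # increase in new code generation
rework_cost = (15, 25)    # increase in rework


def midpoint(value_range: tuple[int, int]) -> float:
    """Return the middle of a (low, high) range."""
    low, high = value_range
    return (low + high) / 2


gross = midpoint(new_code_gain)  # 35.0
rework = midpoint(rework_cost)   # 20.0

# Assumption: a simple additive model, net = gross - rework.
net = gross - rework
rework_share = rework / gross    # fraction of the gross gain lost to rework

print(f"net gain: {net:.0f} points; rework consumes {rework_share:.0%} of the gross gain")
```

With midpoints, more than half of the extra output is spent again on fixing it, and the remaining 15 points sit at the low end of the reported 15-20% net gain.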

Greenfield Projects:

- Low complexity tasks: Massive productivity gains (up to 100%+)

- High complexity tasks: Much more modest gains

Brownfield Projects:

- Both low and high complexity: Much smaller gains overall

- Some teams actually experience negative productivity (slowdowns)

This directly supports the METR findings about experienced developers on mature codebases. The “Brownfield” category (existing, complex projects) is exactly what the METR study examined: experienced developers working on large, mature repositories. The key insight: AI shines on simple, greenfield work but struggles with complex, real-world codebases. Yet most enterprise software development happens in the brownfield category: maintaining and extending existing systems with complex requirements, legacy constraints, and high quality standards. This explains why the marketing demos (usually simple, isolated examples) don’t match real-world experience for senior developers working on production systems. But here’s the catch: the easy tasks aren’t the bottleneck in most engineering teams. You can also check this post that I like: Writing Code Was Never The Bottleneck

The Learning Curve That Nobody Talks About

After processing all this information, I think the real issue becomes clear. Developers are under pressure from three directions:

Managers want speed: “Can’t we just use AI to ship faster?”

Customers want cheaper: “Your competitor quoted 50% less because they use AI.”

Investors want efficiency: “AI should reduce your engineering costs.”

This creates a perfect storm where teams adopt AI tools not because they improve outcomes, but because they appear to improve the metrics that matter to stakeholders who don’t read code. As someone who uses AI tools extensively, I can tell you they’re incredibly powerful when used correctly. But there’s a critical learning curve that the marketing glosses over.

The Pattern I’ve Observed:

Weeks 1-4: Developers treat AI like a magic code printer. They copy-paste everything it generates and feel incredibly productive.

Weeks 5-8: Reality sets in. Bug reports increase. Integration becomes painful. Code reviews take longer because the generated code doesn’t fit the existing patterns.

Months 3-4: Two paths emerge:

- Some developers invest time understanding how to give AI better context, clearer requirements, and proper boundaries

- Others continue the copy-paste pattern, accumulating technical debt

Month 6+: The developers who learned to work with AI see genuine productivity gains. The others are drowning in maintenance overhead.

The snowball effect kicks in when teams don’t invest in understanding these tools. Each poorly integrated AI-generated piece makes the next integration harder, creating a vicious cycle that eventually overwhelms the codebase.

Where AI Actually Helps

I’m a heavy user of AI coding tools, and they genuinely improve my work. But effectiveness depends entirely on how you approach them.

Where AI Excels (with proper context):

- Rapid prototyping and POCs: AI serves as my prototype machine, generating different toy implementations so I can compare approaches, test tools, and run benchmarks before committing to production solutions

- Feature generation (near-boilerplate work like CRUD operations, validations, etc.) when you provide clear patterns and constraints, especially in backend projects where I can easily write comprehensive unit tests

- Discussing and learning new patterns and architectures: sometimes I miss the real opportunity and over-engineer solutions. Long discussion sessions with Claude really help me see multiple alternative approaches and flows.

- API integrations when you share existing code styles and error handling patterns

- Test scaffolding when you explain the testing strategy and coverage requirements

- Code explanation when you need to understand unfamiliar patterns quickly

Where AI Struggles (regardless of input quality):

- Complex business logic that requires deep domain understanding

- System architecture decisions that need long-term maintenance consideration

- Security-critical code where subtle vulnerabilities have major consequences

- Integration with legacy systems that have undocumented quirks

The key insight: AI tools amplify your existing knowledge and patterns. If you understand your codebase architecture, coding standards, and business requirements, AI becomes incredibly powerful. If you don’t, it just generates sophisticated-looking technical debt faster.
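As a minimal sketch of what "giving AI proper context" can look like in practice, here is a small helper that assembles a prompt from the kinds of context listed above. Everything in it is hypothetical: `TaskContext`, `build_prompt`, and the section titles are names I made up for illustration, not part of any tool’s API.

```python
from dataclasses import dataclass, field


@dataclass
class TaskContext:
    """Hypothetical container for the context worth sharing with an AI tool."""
    task: str
    code_style_example: str = ""
    error_handling_pattern: str = ""
    constraints: list[str] = field(default_factory=list)
    testing_strategy: str = ""


def build_prompt(ctx: TaskContext) -> str:
    """Join the non-empty context sections into one plain-text prompt."""
    sections = [
        ("Task", ctx.task),
        ("Existing code style", ctx.code_style_example),
        ("Error handling pattern", ctx.error_handling_pattern),
        ("Constraints", "\n".join(f"- {c}" for c in ctx.constraints)),
        ("Testing strategy", ctx.testing_strategy),
    ]
    # Skip sections that were left empty so the prompt stays focused.
    return "\n\n".join(
        f"## {title}\n{body}" for title, body in sections if body.strip()
    )


if __name__ == "__main__":
    example = TaskContext(
        task="Add a DELETE endpoint for orders",
        constraints=["No new dependencies", "Follow the existing repository layer"],
        testing_strategy="Unit tests for the handler, one integration test",
    )
    print(build_prompt(example))
```

The point is not the helper itself but the habit: the task description is only one of several sections, and the others carry the patterns and boundaries that keep generated code consistent with the codebase.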

The Real 10x Opportunity

The most successful teams I’ve worked with don’t use AI to write more code; they use it to write better code. They leverage AI for code review, documentation generation, and test coverage improvement rather than raw, end-to-end feature generation. Just as importantly, they treat it as a colleague to discuss ideas with and learn from. The 10x opportunity isn’t in generating code faster. It’s in shipping code that doesn’t come back to haunt you six months later.

AI coding tools are here to stay. They don’t make developers more powerful; they simply free up bandwidth that was previously consumed by routine tasks. But they’re tools that require skill, understanding, and thoughtful application, not magic productivity multipliers that work regardless of how you use them. The most successful developers I know don’t use AI to replace their thinking; they use it to amplify their expertise. They spend time learning how these tools work, understanding their strengths and limitations, and developing workflows that leverage AI effectively while avoiding its pitfalls.

The next time someone promises you 10x productivity from AI, ask them about the learning curve. Ask about the context requirements. Ask about what happens when you don’t invest time in understanding how to use these tools properly. That’s where the real conversation about AI and productivity begins: not in the promises, but in the practice.

—

Sources: